WALL-E:

A robot that interacts through more natural forms of communication

Fall 2014

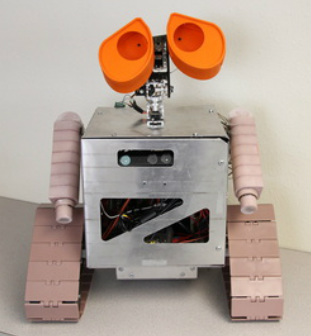

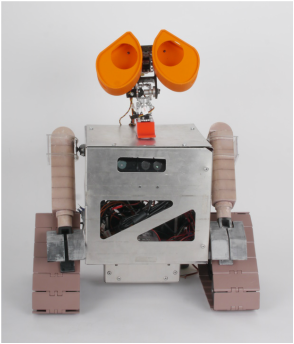

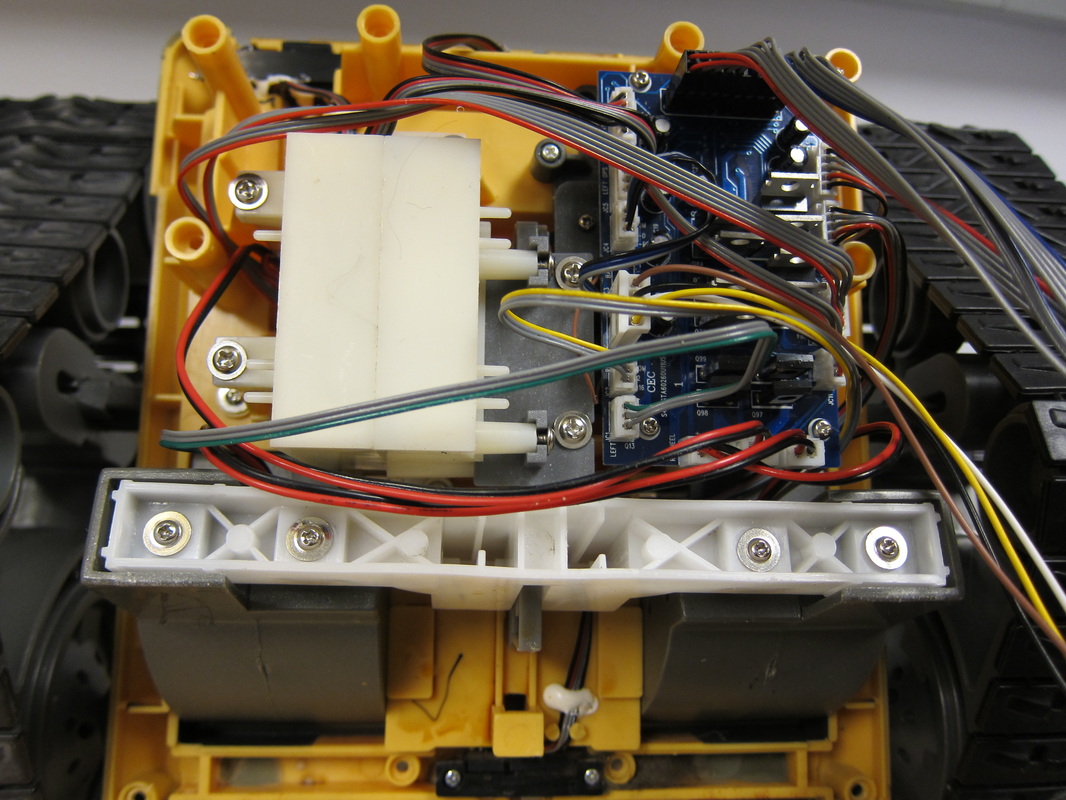

This semester marked the completion of my autonomous robot, made possible now that I'm not distracted by SCOPE and because I integrated it into my Computational Robotics class! (Dealing with the Kinect and extracting data to do something interactive is pretty challenging.) The whole system runs off two Arduinos and a standard-issue Olin laptop running Ubuntu 12.04. As of 12/18/2014, 7 of WALL-E's 9 DOF are working. All code for switching behavioral states is in Python, and all code modules communicate via ROS: the Arduino side uses rosserial, and communication with the Kinect uses the OpenNI and NiTE binaries. (We found those on a mirror site, since Apple has since bought PrimeSense, the company that created the open-source code and the sensing technology behind the first Kinect.)

GitHub code is here: https://github.com/srli/wall-e

Final Report can be viewed here, and our final poster is here.
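The page says behavior switching lives in Python but doesn't list the states or what triggers them. As a minimal sketch, assuming a small table-driven state machine, the idea could look like this; the state names (IDLE, GREET, FOLLOW, SHY) and event strings are hypothetical placeholders, not the project's actual ones (the GitHub repo has the real code).

```python
from enum import Enum, auto


class Behavior(Enum):
    """Hypothetical behavioral states, for illustration only."""
    IDLE = auto()
    GREET = auto()
    FOLLOW = auto()
    SHY = auto()


# Hypothetical transition table: (current state, perceived event) -> next state.
TRANSITIONS = {
    (Behavior.IDLE, "person_detected"): Behavior.GREET,
    (Behavior.GREET, "wave"): Behavior.FOLLOW,
    (Behavior.GREET, "person_lost"): Behavior.IDLE,
    (Behavior.FOLLOW, "too_close"): Behavior.SHY,
    (Behavior.FOLLOW, "person_lost"): Behavior.IDLE,
    (Behavior.SHY, "person_lost"): Behavior.IDLE,
}


class BehaviorMachine:
    def __init__(self, start=Behavior.IDLE):
        self.state = start

    def handle(self, event):
        """Move to the next state if (state, event) has a transition; otherwise stay put."""
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state


if __name__ == "__main__":
    machine = BehaviorMachine()
    for event in ["wave", "person_detected", "wave", "too_close", "person_lost"]:
        print(event, "->", machine.handle(event).name)
```

Running it prints `wave -> IDLE` first (a wave means nothing until someone has been detected), then GREET, FOLLOW, SHY, and back to IDLE. In a ROS setup, `handle` would presumably be called from a topic subscriber callback, with the resulting state published for the Arduino (via rosserial) to act on; keeping the transitions in one table makes new behaviors a one-line change.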

Here are some videos of our incremental progress from mid-November to mid-December 2014.

Fall 2013

This semester's goal is to make a functioning robot that can interact with humans autonomously. Stay tuned for updates!

Spring 2013: Olin Self Study

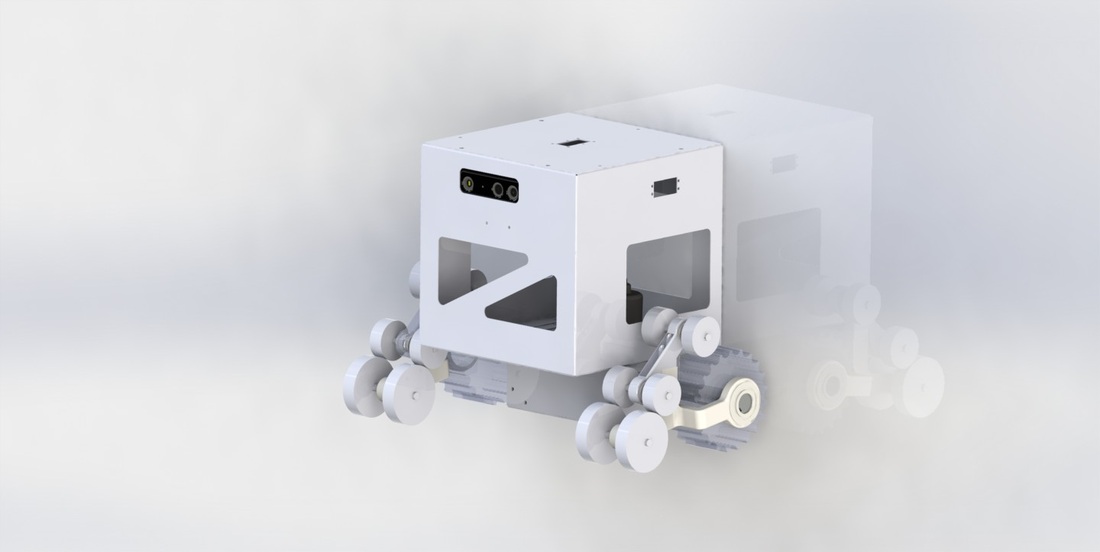

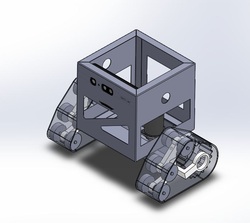

CAD model of Wall-E as of 3/9/13

As much as I wanted to build the ROV I had designed in my Mech Design class in Fall 2012, Dave Barrett made me realize that if I didn't finish WALL-E soon, I'd graduate, and WALL-E would be a box of parts. Our marketing department (aka the OVAL tour guides) is always bringing tours by to look up at our wall of posters, where a picture of the Ultimate Wall-E toy I hacked hangs. Excited prospies (prospective students) are always like "Wow! Someone built a Wall-E?" When I overheard that one day, I had to stop the tour and was like "It's not finished yet! It'll be cooler than that poster!"

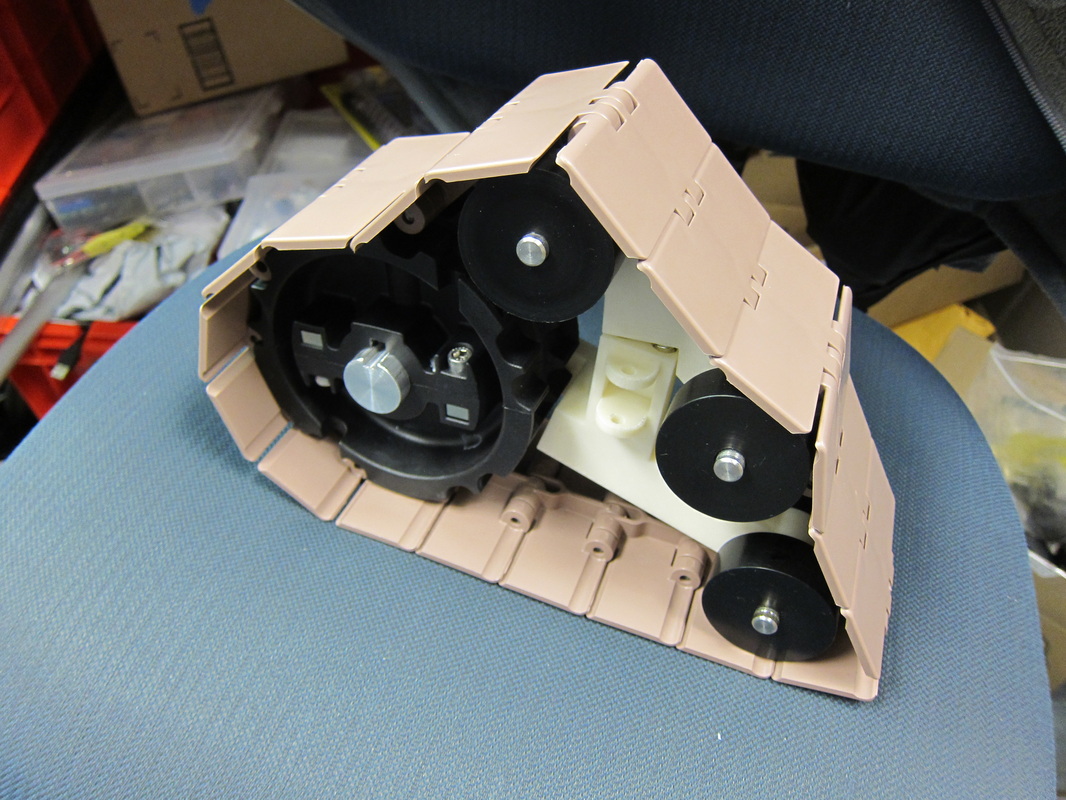

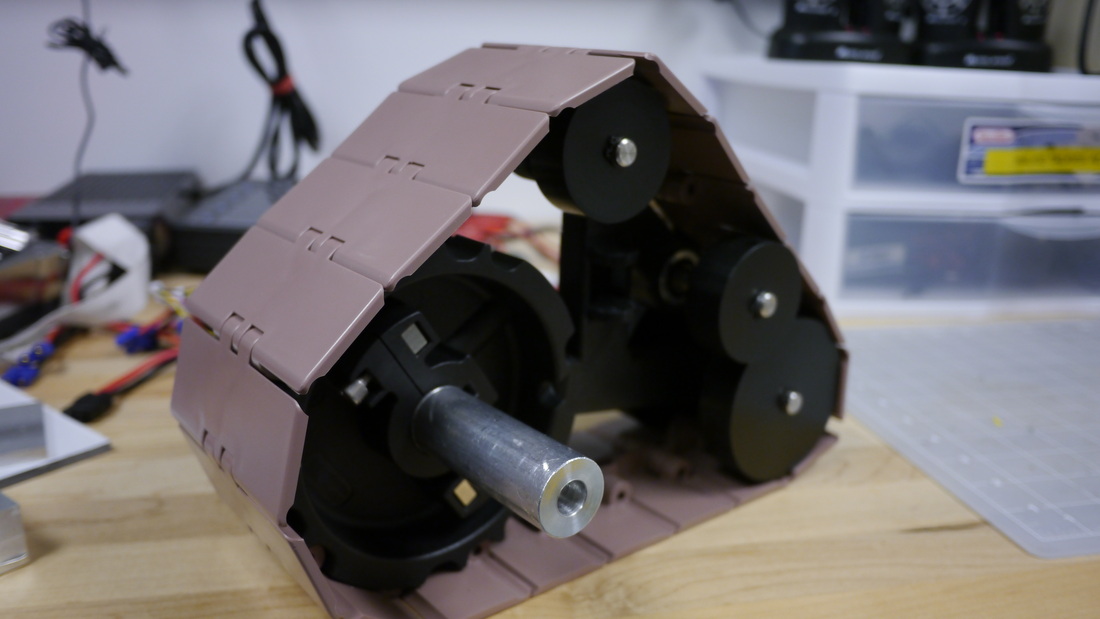

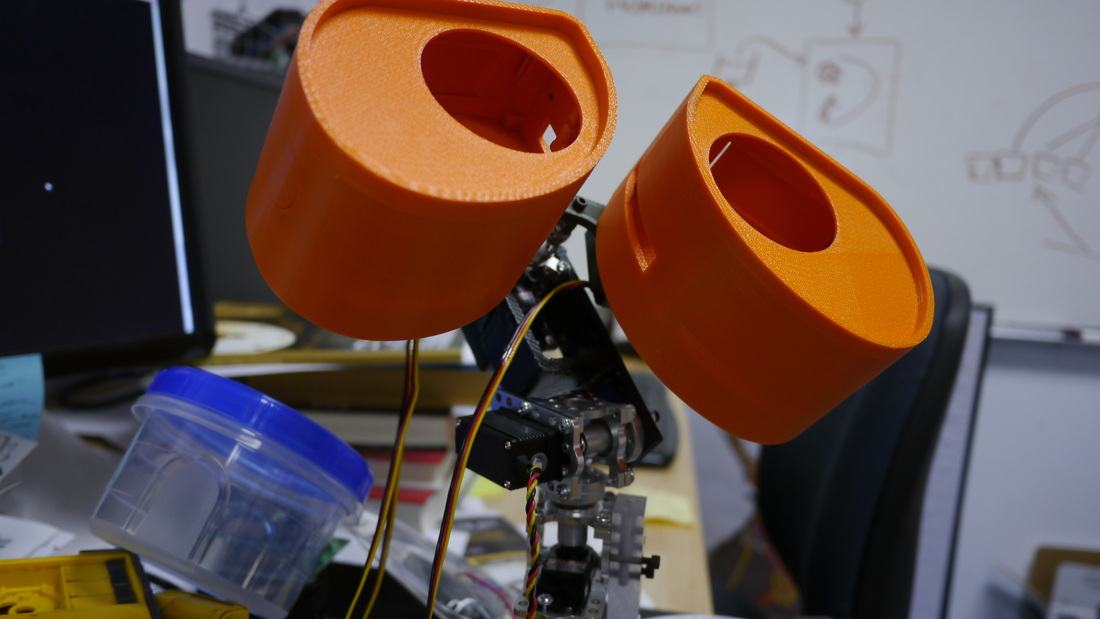

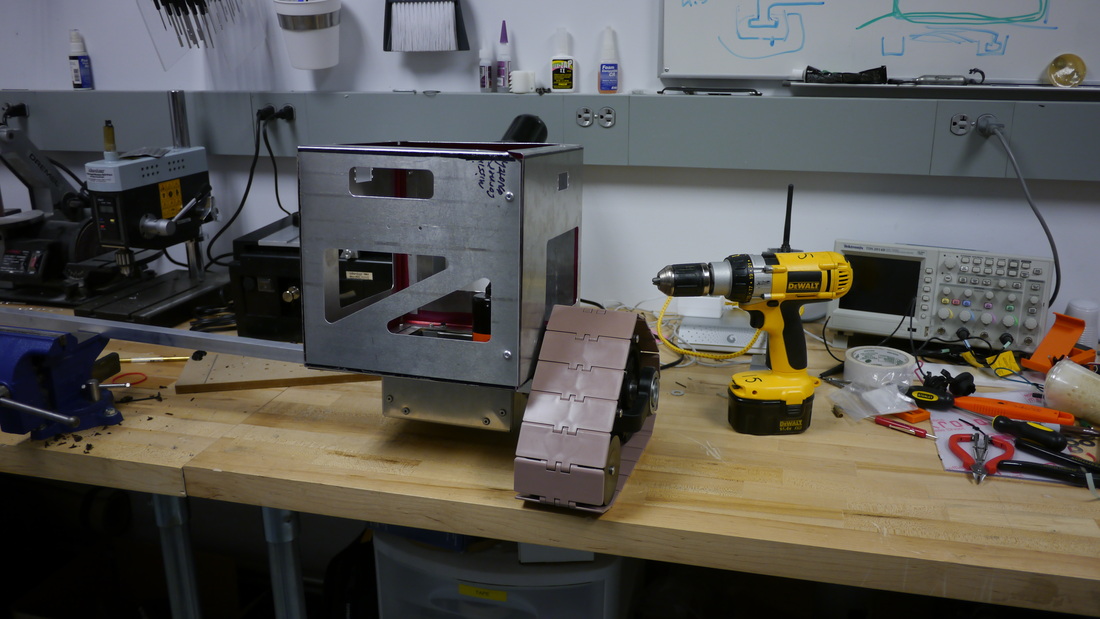

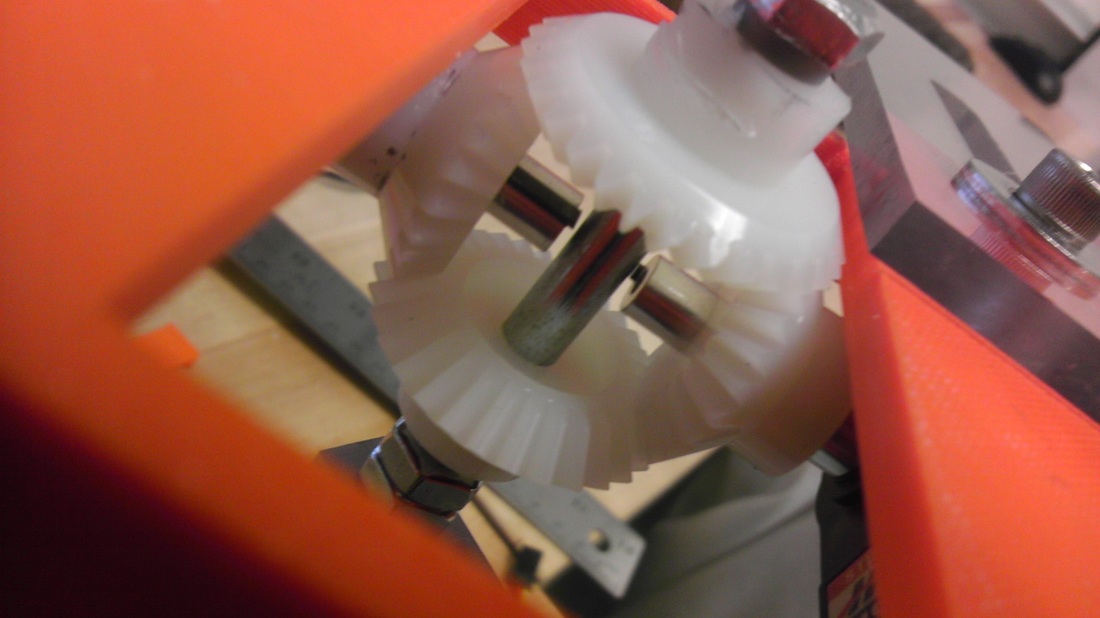

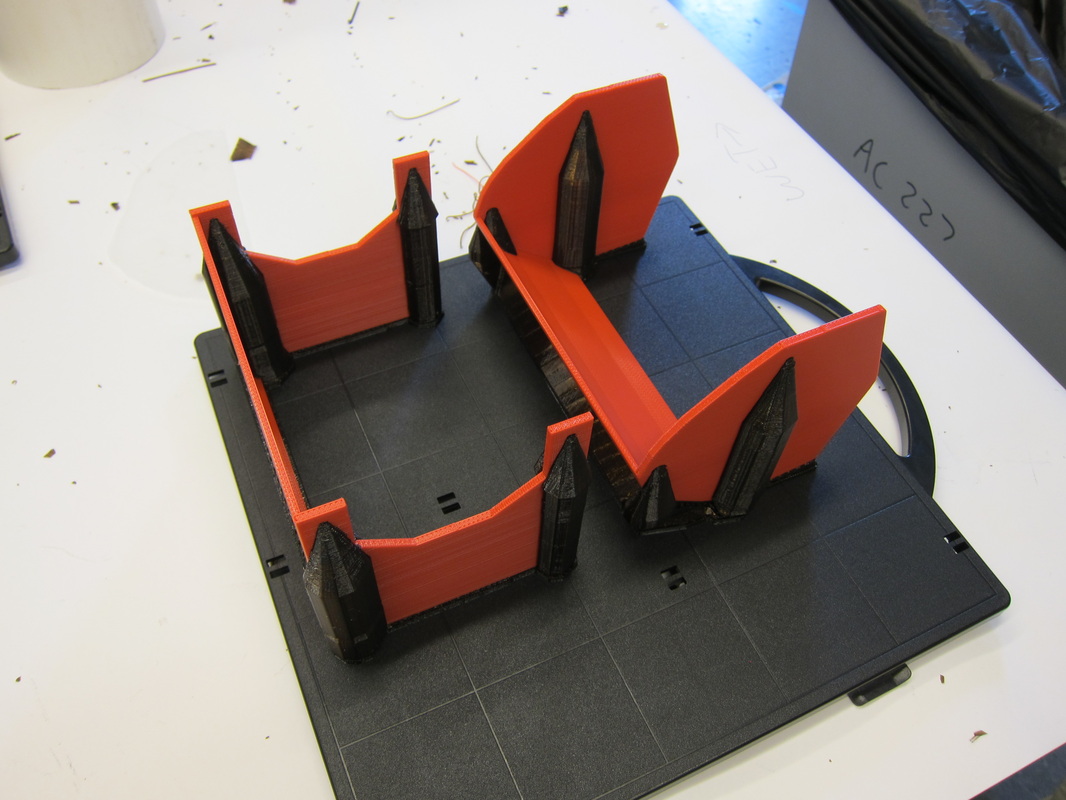

A couple of redesigns: WALL-E's head is entirely different now. I decided against the differential since hobby servos don't provide good feedback on how fast they're moving (I'd have to upgrade to nicer servos like Dongbu's Herkulex), and I've designed the new head around a typical pan-tilt mechanism made with aluminum servo mounts from ServoCity. I'm currently fabricating the chassis and frame that hold everything together, so that I'll have all the hardware by the week after spring break and can start programming full-body responses to human gestures. The drive train consists of a 3D-printed frame with sprockets and 820-series plastic conveyor chain from McMaster-Carr, plus some custom-made Delrin sprockets riding on 304 stainless steel shafts.
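With a pan-tilt head, pointing WALL-E's eyes at a person reduces to two angles. This is a minimal sketch, assuming the target (say, a tracked head joint) arrives in meters in a camera-style frame with +x right, +y up, +z forward, and that both hobby servos sit at 90 degrees when the head looks straight ahead; the frame convention, the centering, and the function names are assumptions, and the sign of each axis depends on how the servos are mounted.

```python
import math


def clamp(value, low, high):
    """Limit value to the range [low, high]."""
    return max(low, min(high, value))


def aim_pan_tilt(x, y, z, center_deg=90.0, min_deg=0.0, max_deg=180.0):
    """Return (pan, tilt) hobby-servo angles in degrees that point the head at (x, y, z).

    Assumes +x right, +y up, +z forward (meters), servos centered at center_deg.
    """
    if z <= 0:
        raise ValueError("target must be in front of the head (z > 0)")
    pan = math.degrees(math.atan2(x, z))                  # left/right angle
    tilt = math.degrees(math.atan2(y, math.hypot(x, z)))  # up/down angle above horizontal
    return (clamp(center_deg + pan, min_deg, max_deg),
            clamp(center_deg + tilt, min_deg, max_deg))


if __name__ == "__main__":
    print(aim_pan_tilt(0.0, 0.0, 2.0))  # straight ahead
    print(aim_pan_tilt(1.0, 0.0, 1.0))  # 45 degrees to one side
```

Computing tilt against `hypot(x, z)` rather than `z` alone keeps the up/down angle correct when the person is off to the side; the clamp protects the mechanism from being driven past its travel.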

WALL-E, Partially Constructed and Reacting to Gestures

Phase Three: Fall 2012

I learned my lesson about stretching myself too thin during Spring 2012. My main goals for the Fall 2012 semester were to develop the controls software in LabVIEW through the Robotics I class, debug the hardware, and link human actions to autonomous reactions. WALL-E was presented at Olin's EXPO on December 17, 2012; a brief demo video showcasing Leeds University's Kinesthesia Toolkit and the current design of the eyes is below.

Kinect + WALL-E working demo Fall 2012

WALL-E, Continued: Spring 2012

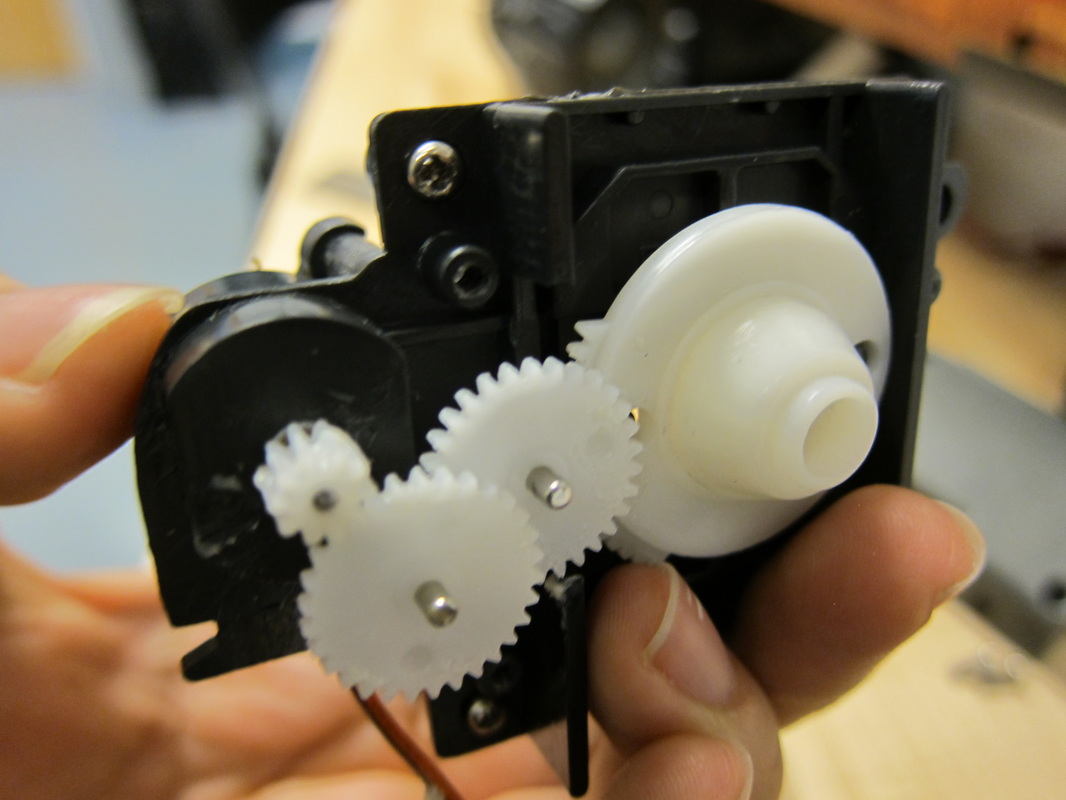

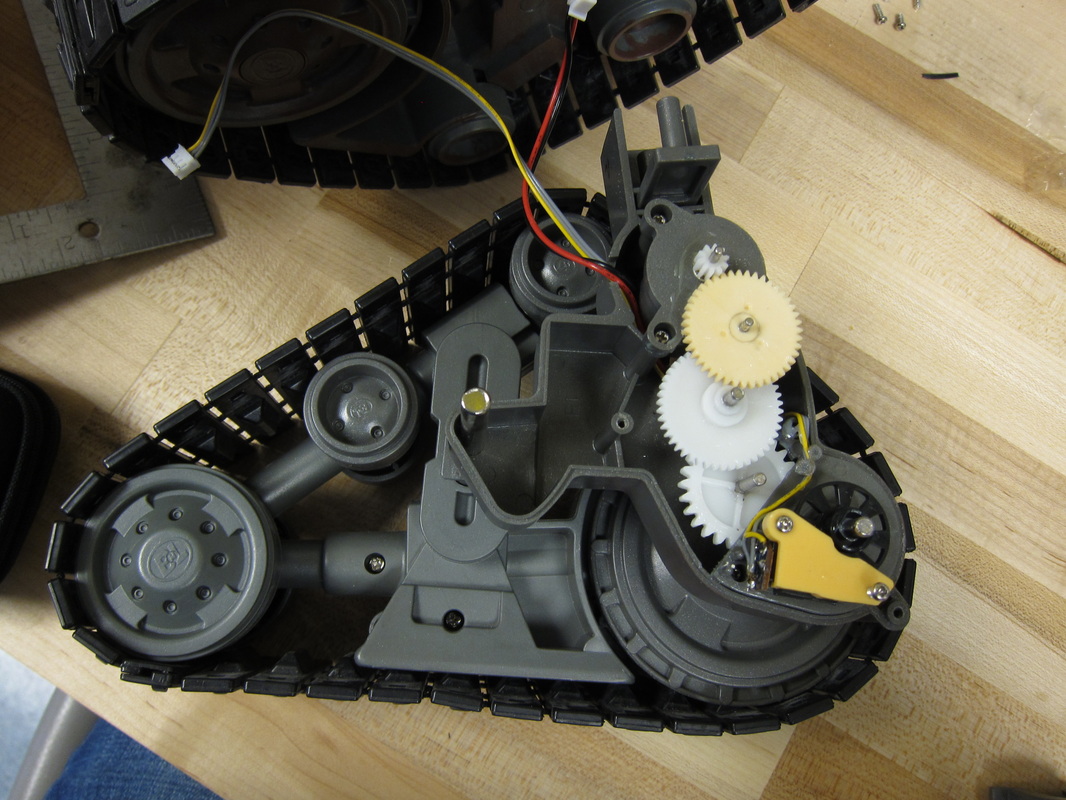

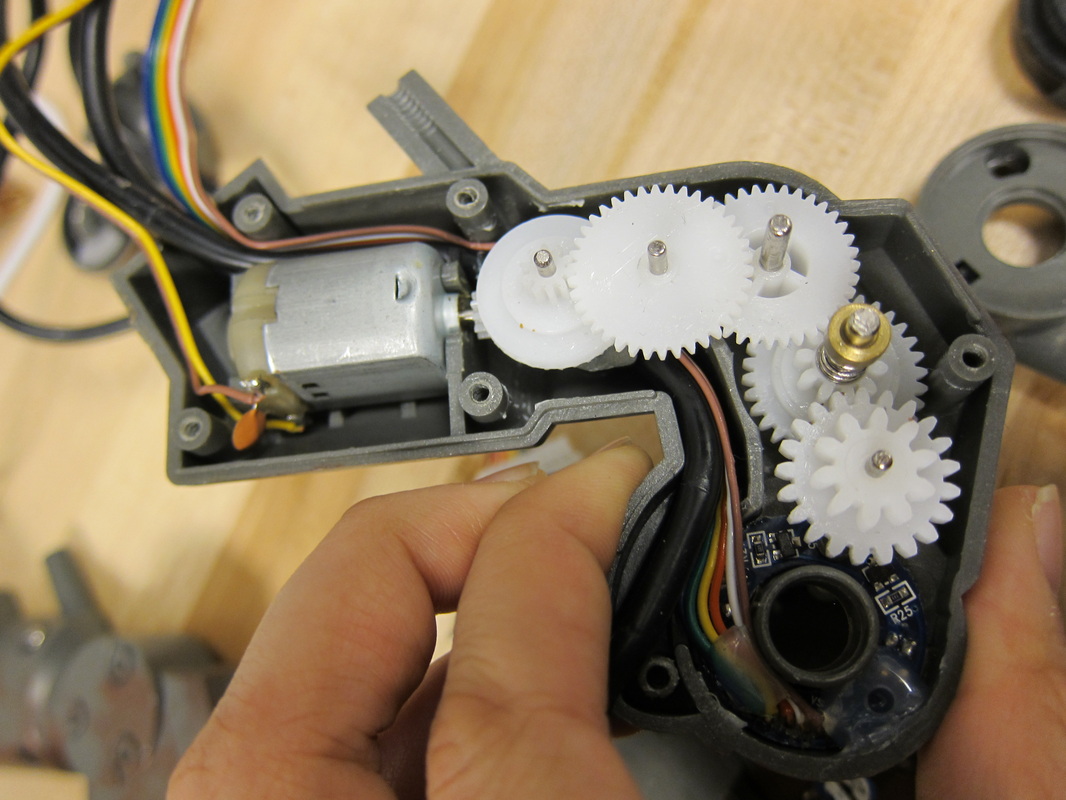

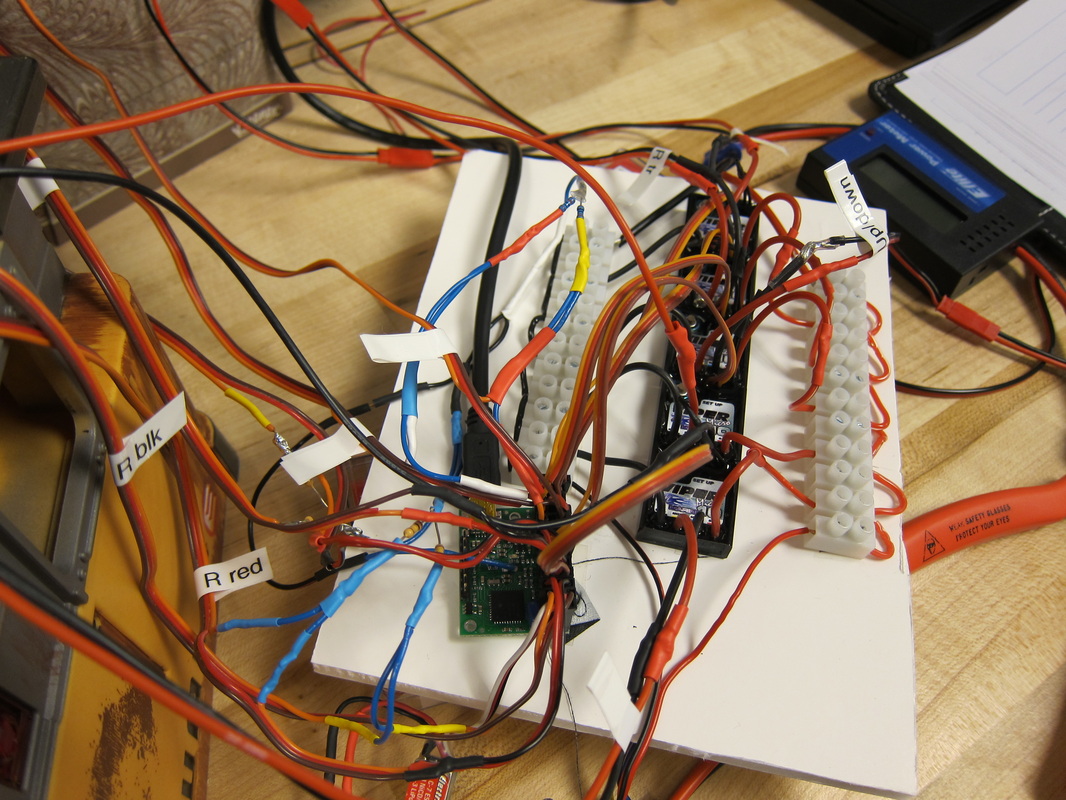

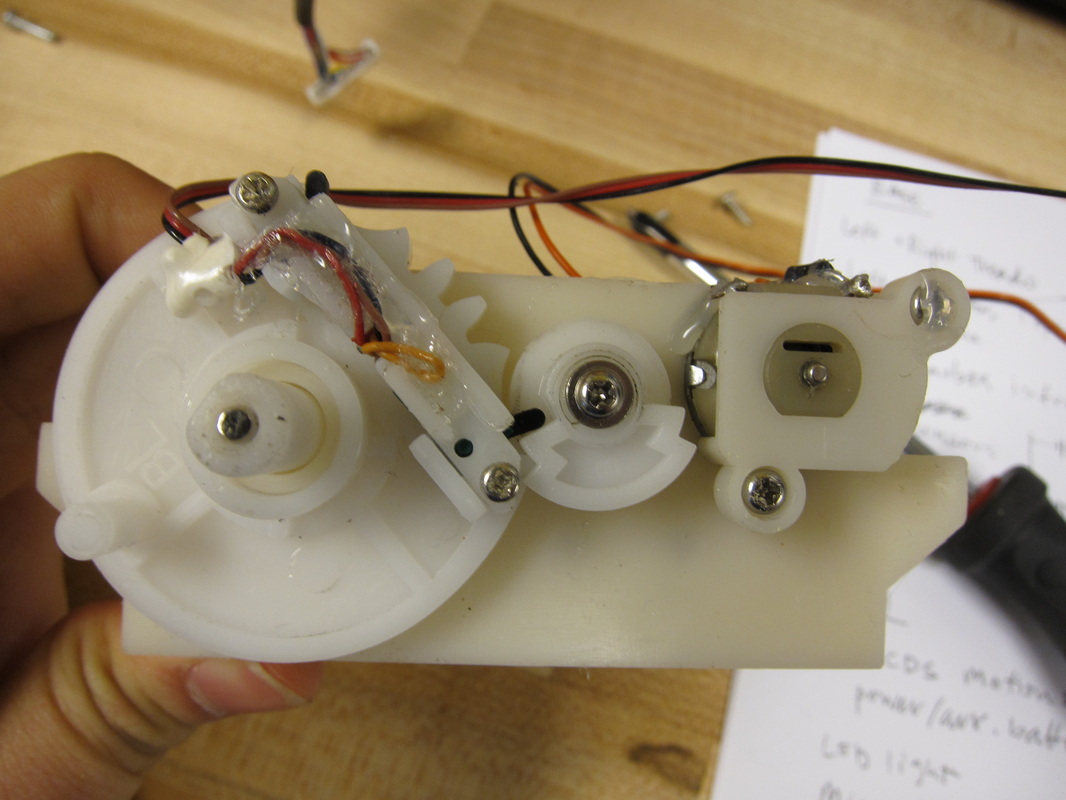

Starting up again in the spring, I saw that a SCOPE project from the previous year that used a FIT PC and a Kinect did not seem to be the magical solution we were all hoping for. With an emphasis on wrapping up WALL-E, I went back to the drawing board. The combination of FIT PCs, the Kinect, and LabVIEW seemed too sluggish for an interactive robot, and at the time I could not find many alternatives that fit the desired form factor. I decided that it would be easier to run WALL-E through wireless communication between my laptop and an Arduino. By the end of the semester, I could trigger sounds and movements in WALL-E through motions picked up by the Kinect. The plan was to use Pololu Wixels to communicate between my laptop and the Arduino, and an audio shield to drive the speaker. WALL-E's arms have been modified for a 180-degree range of motion by replacing the gearbox with a servo.
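Triggering sounds and movements from Kinect motions comes down to classifying skeleton poses and firing a reaction once. The page doesn't say how this was done, so this is only a minimal sketch under assumptions: each frame arrives as a dict of joint positions in meters with +y up, a "raised hand" means the hand is a set margin above the head, and a reaction fires only after the same pose is seen for several consecutive frames. The joint names, margin, frame count, and reaction names (including the sound file) are all hypothetical.

```python
from collections import deque

# Hypothetical gesture -> (sound, movement) table; names are placeholders.
REACTIONS = {
    "right_hand_up": ("sound_whoa.wav", "raise_right_arm"),
    "left_hand_up": ("sound_whoa.wav", "raise_left_arm"),
}


def classify(joints, margin=0.10):
    """Return a gesture name for one skeleton frame, or None.

    joints maps (assumed) joint names to (x, y, z) in meters with +y up.
    A hand counts as raised when it is at least `margin` meters above the head.
    """
    head_y = joints["head"][1]
    if joints["right_hand"][1] > head_y + margin:
        return "right_hand_up"
    if joints["left_hand"][1] > head_y + margin:
        return "left_hand_up"
    return None


class GestureTrigger:
    """Fire a reaction only after the same gesture is seen in `frames` consecutive frames."""

    def __init__(self, frames=5):
        self.history = deque(maxlen=frames)

    def update(self, joints):
        self.history.append(classify(joints))
        first = self.history[0]
        if (first is not None and len(self.history) == self.history.maxlen
                and all(g == first for g in self.history)):
            self.history.clear()  # don't re-fire on every frame while the pose is held
            return REACTIONS[first]
        return None
```

Requiring a few agreeing frames is a cheap way to ignore single-frame tracking glitches, at the cost of a short delay before WALL-E reacts.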

In the beginning....

WALL-E started out as an Ultimate WALL-E toy that sat underneath a table in Drew Bennett's lab, untouched and in pristine condition. Looking for something to do for a Passionate Pursuit, I asked Drew about projects I could work on with him. He mentioned hacking WALL-E and taking advantage of the hardware he had to make it a fully autonomous robot.

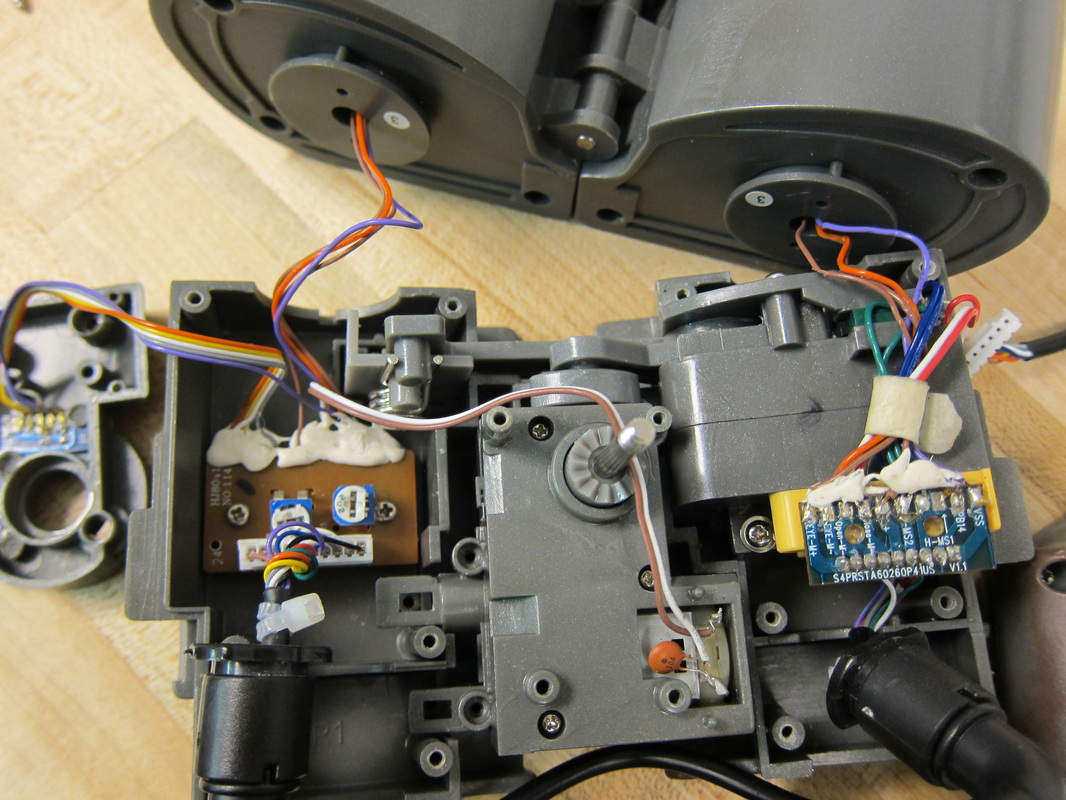

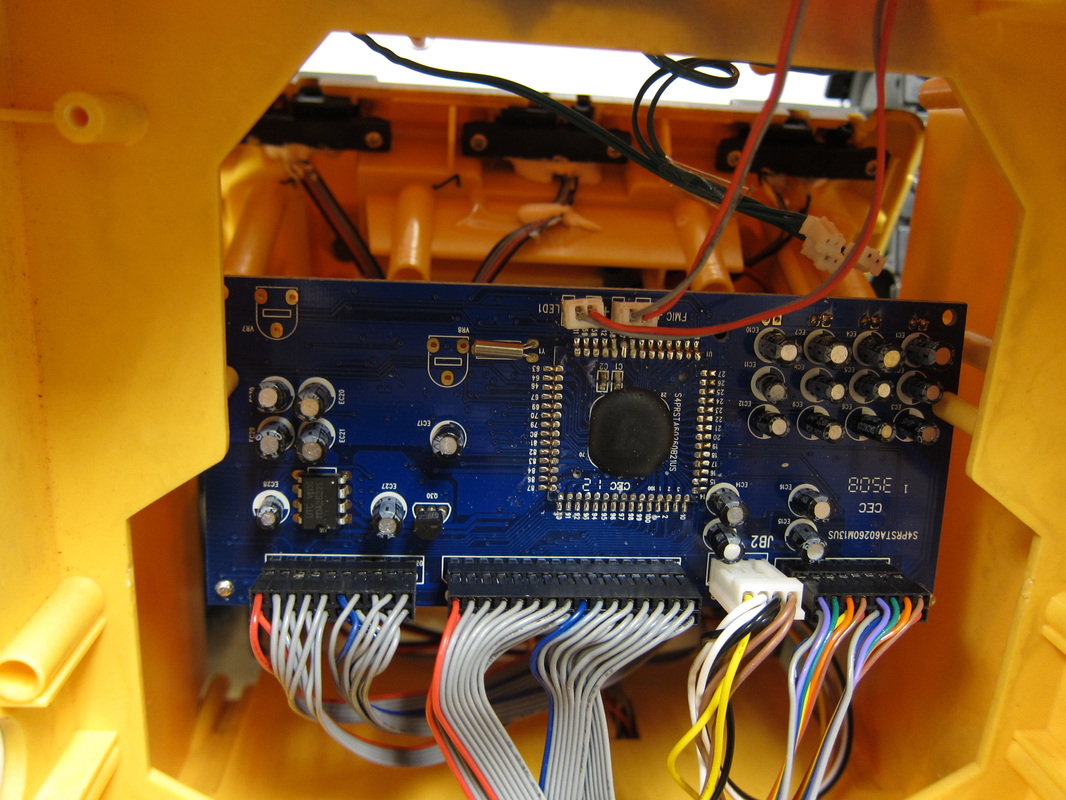

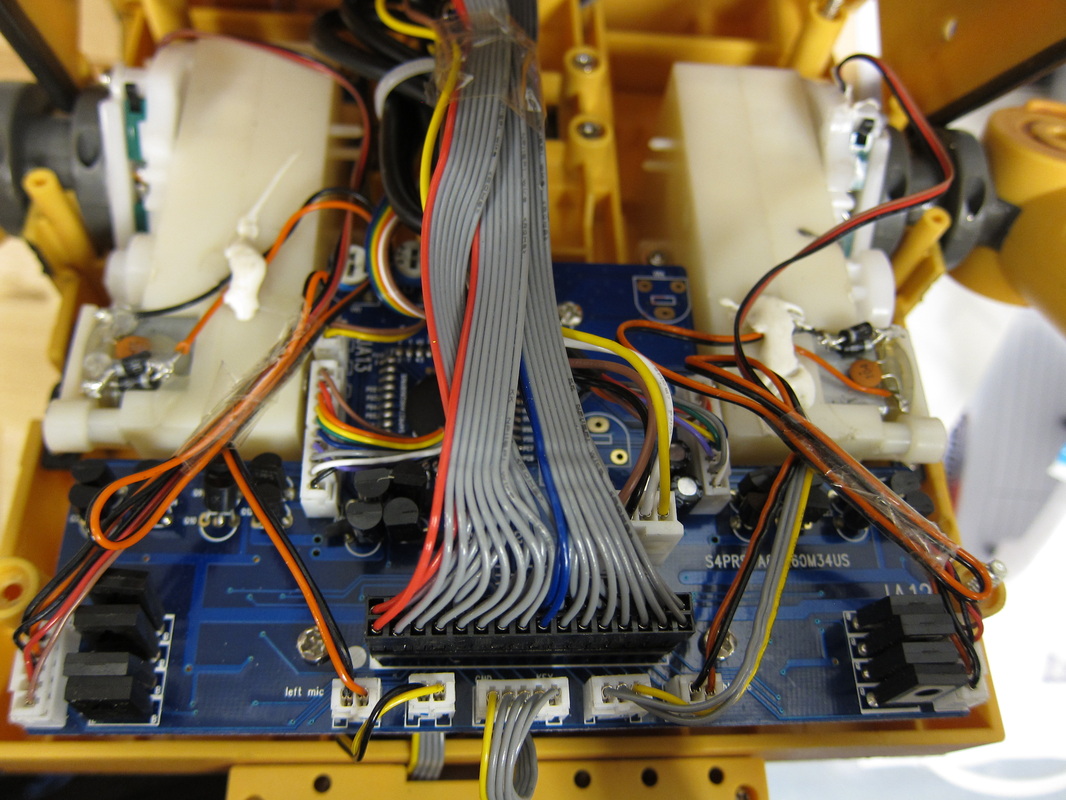

Since February 2011, I've worked on documenting WALL-E's electronics and mechanical elements. The first couple of months were all about setting WALL-E up to see if we could reprogram him. The initial goal was to tap into the boards already in WALL-E and communicate with the main chip (state-of-the-art technology, circa 1982). We figured that if the factory could fine-tune WALL-E's actions through a few pins, we could control him through the same pins too.

After a few weekends of staring at boards, we realized we were making the job much harder than it needed to be. Most of WALL-E's emotions were probably programmed in assembly, so writing and understanding new code would take more time than just gutting him and starting from scratch. We had a hard time deciding whether to gut WALL-E, since Ultimate WALL-E toys are now rare and expensive. After buying a smaller WALL-E to play with and hack, we realized that the Ultimate WALL-E was a pretty good platform to start with, especially if we wanted to attach a Microsoft Kinect and make WALL-E fully autonomous.

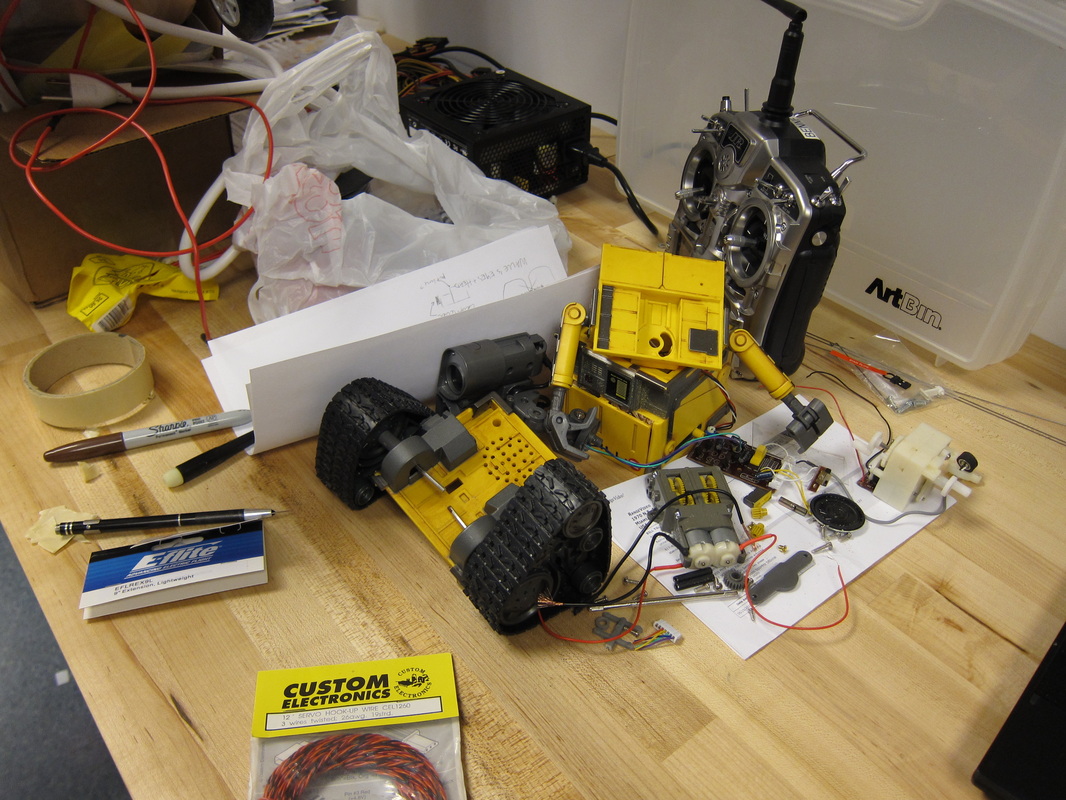

During my summer research internship with Drew, WALL-E developed into a very complicated robotics system, as we made plans to use a Fit PC, a Kinect, a Pololu Maestro servo controller, marine speed controllers (they're reversible), and servo motors inside WALL-E. To use all of those components to control WALL-E's movements, I had to figure out what the various inputs and outputs were (like WALL-E's arm motor and limit switches). WALL-E had about 9 motors, 3 LEDs, 4 optical encoders, and numerous limit switches inside of him, to name a few.
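For a sense of how a laptop would drive that hardware, here is a minimal sketch of commanding a reversible speed controller through a Pololu Maestro. It assumes the common hobby RC convention (1500 µs pulse = stop, 1000 to 2000 µs = full reverse to full forward) and uses the Maestro's compact-protocol Set Target command (0x84, channel, then the target in quarter-microseconds split into two 7-bit bytes). Whether the project used this protocol or these pulse widths isn't stated, and the function names are hypothetical; check the actual controller's calibration before relying on the numbers.

```python
def speed_to_pulse_us(speed, neutral_us=1500, span_us=500):
    """Map a signed speed in [-1, 1] to an RC pulse width in microseconds.

    Assumes a reversible controller with 1500 us = stop and +/-500 us = full speed.
    """
    speed = max(-1.0, min(1.0, speed))
    return round(neutral_us + speed * span_us)


def maestro_set_target(channel, pulse_us):
    """Build a Pololu Maestro compact-protocol Set Target command.

    The target is in quarter-microseconds, sent as low 7 bits then high 7 bits.
    """
    if not 0 <= channel <= 23:
        raise ValueError("Maestro channels are numbered 0-23 at most")
    target = int(round(pulse_us * 4))
    return bytes([0x84, channel, target & 0x7F, (target >> 7) & 0x7F])


if __name__ == "__main__":
    # Half speed forward on a (hypothetical) drive motor wired to channel 0.
    print(maestro_set_target(0, speed_to_pulse_us(0.5)).hex())
```

The resulting bytes would be written to the Maestro's serial port, for example with pySerial. Keeping the speed mapping separate from the packet encoding makes it easy to recalibrate the neutral point without touching the protocol code.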

Below are pictures and a short video on summer research at Olin featuring Drew's lab, our planes, and Wall-e.
